Few-shot learning in the era of Big Data

A literature review on perspectives and challenges

Keywords:

Machine Learning, Few-shot learning, Big Data, Small samples, Opinion survey

Abstract

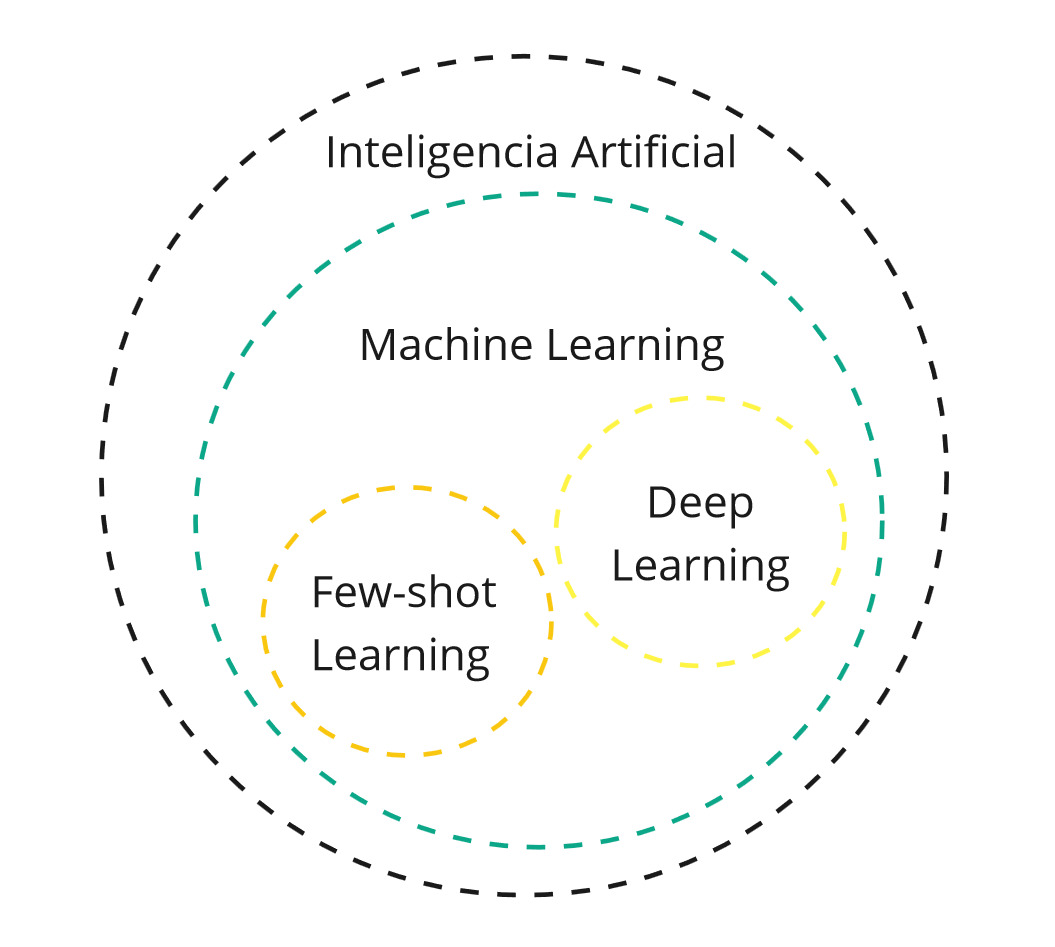

The growth of Big Data has made large volumes of data available, enabling the use of machine learning techniques for decision-making in many fields. However, the effectiveness of these models depends on the availability of large amounts of data, which raises the challenge of learning from only a few samples. Learning from few samples matters in many applications where large volumes of data are not available, such as opinion surveys, in which data collection is difficult, mainly because of low engagement and participation in questionnaires. This approach can make opinion surveys easier to conduct or reduce reliance on them. However, several challenges must be overcome to achieve accurate performance in few-shot learning tasks, such as selecting relevant samples and choosing appropriate training methods. In light of this, we discuss the perspectives and challenges of learning from few samples in the era of Big Data. We review few-shot learning techniques and their applications as an alternative for dealing with small samples. Reviewing these techniques and their application to limited datasets can provide valuable insights for improving machine learning models across application domains.
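To make the idea of learning from few samples concrete, here is a minimal sketch of nearest-prototype classification in the spirit of the prototypical networks of Snell, Swersky and Zemel (2017): each class is summarized by the mean of its few labeled (support) examples, and a new (query) example is assigned to the class whose prototype is closest. This is an illustration under stated assumptions, not the method of this review: the embeddings are assumed to be precomputed lists of floats (the original method learns them with a neural network), and the function names, the "agree"/"disagree" labels and the toy data are hypothetical.

```python
import math
from collections import defaultdict


def class_prototypes(support):
    """Average the support embeddings of each class into one prototype vector.

    `support` is a list of (embedding, label) pairs; embeddings are assumed to be
    precomputed lists of floats (prototypical networks would learn an encoder).
    """
    sums = {}
    counts = defaultdict(int)
    for vector, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(vector)
        for i, value in enumerate(vector):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def squared_euclidean(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, prototypes):
    """Return (best_label, probabilities) from a softmax over negative distances."""
    labels = list(prototypes)
    scores = [-squared_euclidean(query, prototypes[label]) for label in labels]
    top = max(scores)  # subtract the largest score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    probs = {label: e / total for label, e in zip(labels, exps)}
    best = max(probs, key=probs.get)
    return best, probs


if __name__ == "__main__":
    # Hypothetical 2-way, 2-shot episode: two labeled answers per class,
    # each already embedded as a 2-dimensional vector.
    support = [
        ([1.0, 0.0], "agree"), ([0.8, 0.2], "agree"),
        ([0.0, 1.0], "disagree"), ([0.2, 0.8], "disagree"),
    ]
    prototypes = class_prototypes(support)
    label, probs = classify([0.9, 0.1], prototypes)
    print(label)  # agree
```

Averaging the support set keeps the classifier cheap when only a handful of labeled examples per class exist; prototypical networks apply the same distance-based softmax but train the embedding end to end over many such episodes.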



License

Proposed Policy for Open Access Journals

Authors who publish in this journal agree to the following terms:

- Authors retain copyright and grant the journal the right of first publication, with the work simultaneously licensed under the Creative Commons Attribution License, which allows the work to be shared with acknowledgment of authorship and initial publication in this journal.

- Authors are permitted to enter into additional, separate contractual arrangements for the non-exclusive distribution of the version of the work published in this journal (e.g., posting it in an institutional repository or publishing it as a book chapter), with acknowledgment of authorship and initial publication in this journal.

- Authors are permitted and encouraged to post and distribute their work online (e.g., in institutional repositories or on their personal website) at any point before or during the editorial process, as this can lead to productive changes as well as increase the impact and citation of the published work (see The Effect of Open Access).